Image anti-alias with local features

```python
%%capture
!pip install kornia
!pip install kornia-rs
```


In this example we will show the benefits of using anti-aliased patch extraction with kornia.
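To see why anti-aliasing matters, here is a minimal sketch in plain PyTorch (not the kornia API). It downsamples a one-pixel checkerboard by a factor of 2 in two ways. Naive subsampling keeps every second pixel, which turns the pattern into a flat black image. Area averaging, approximated here with `torch.nn.functional.avg_pool2d`, averages each 2x2 block instead and keeps the expected uniform gray.

```python
import torch
import torch.nn.functional as F

# 8x8 checkerboard with 1-pixel squares: values alternate 0, 1, 0, 1, ...
ys, xs = torch.meshgrid(torch.arange(8), torch.arange(8), indexing="ij")
board = ((ys + xs) % 2).float()[None, None]  # shape (1, 1, 8, 8)

# Naive downsampling by 2: keep every second pixel, no prefiltering
naive = board[..., ::2, ::2]

# Anti-aliased downsampling by 2: average each 2x2 block first
area = F.avg_pool2d(board, kernel_size=2)

print(naive.unique())  # tensor([0.]) -> the pattern collapses to black
print(area.unique())   # tensor([0.5000]) -> the mean intensity is preserved
```

The `interpolation="area"` option used below to create the small image relies on the same block-averaging idea.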

```python
import io

import requests


def download_image(url: str, filename: str = "") -> str:
    # Use the last URL component as the file name unless one is given
    filename = url.split("/")[-1] if len(filename) == 0 else filename
    # Download
    response = requests.get(url)
    response.raise_for_status()  # fail loudly on HTTP errors
    bytesio = io.BytesIO(response.content)
    # Save file
    with open(filename, "wb") as outfile:
        outfile.write(bytesio.getbuffer())
    return filename


url = "https://github.com/kornia/data/raw/main/drslump.jpg"
download_image(url)
```

```
'drslump.jpg'
```

First, let's load an image.

```python
%matplotlib inline
import kornia as K
import kornia.feature as KF
import matplotlib.pyplot as plt
import torch
```

```python
device = torch.device("cpu")

# Load as a float32 RGB image and add a batch dimension: (1, 3, H, W)
img_original = K.io.load_image("drslump.jpg", K.io.ImageLoadType.RGB32, device=device)[None, ...]
plt.figure()
plt.imshow(K.tensor_to_image(img_original))

B, CH, H, W = img_original.shape
DOWNSAMPLE = 4
# "area" interpolation averages blocks of pixels, i.e. anti-aliased downsampling
img_small = K.geometry.resize(img_original, (H // DOWNSAMPLE, W // DOWNSAMPLE), interpolation="area")
plt.figure()
plt.imshow(K.tensor_to_image(img_small))
```

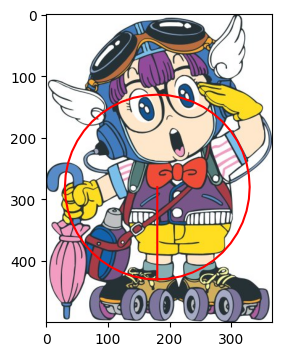

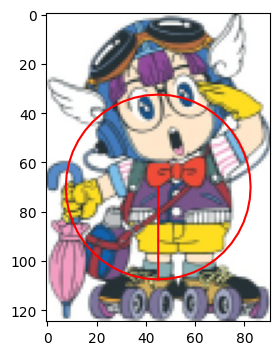

Now, let's define a keypoint with a large support region, represented as a local affine frame (LAF).

```python
def show_lafs(img, lafs, idx=0, color="r", figsize=(10, 7)):
    # Outline of the LAF region(s) belonging to image `idx` of the batch
    x, y = KF.laf.get_laf_pts_to_draw(lafs, idx)
    plt.figure(figsize=figsize)
    if isinstance(img, torch.Tensor):
        img_show = K.tensor_to_image(img)
    else:
        img_show = img
    plt.imshow(img_show)
    plt.plot(x, y, color)


# One LAF of shape (B=1, N=1, 2, 3): [A | center], A = 150 * I, center (x=180, y=280)
laf_orig = torch.tensor([[150.0, 0, 180], [0, 150, 280]]).float().view(1, 1, 2, 3)
# Dividing the whole LAF rescales both its shape and its center
laf_small = laf_orig / float(DOWNSAMPLE)

show_lafs(img_original, laf_orig, figsize=(6, 4))
show_lafs(img_small, laf_small, figsize=(6, 4))
```

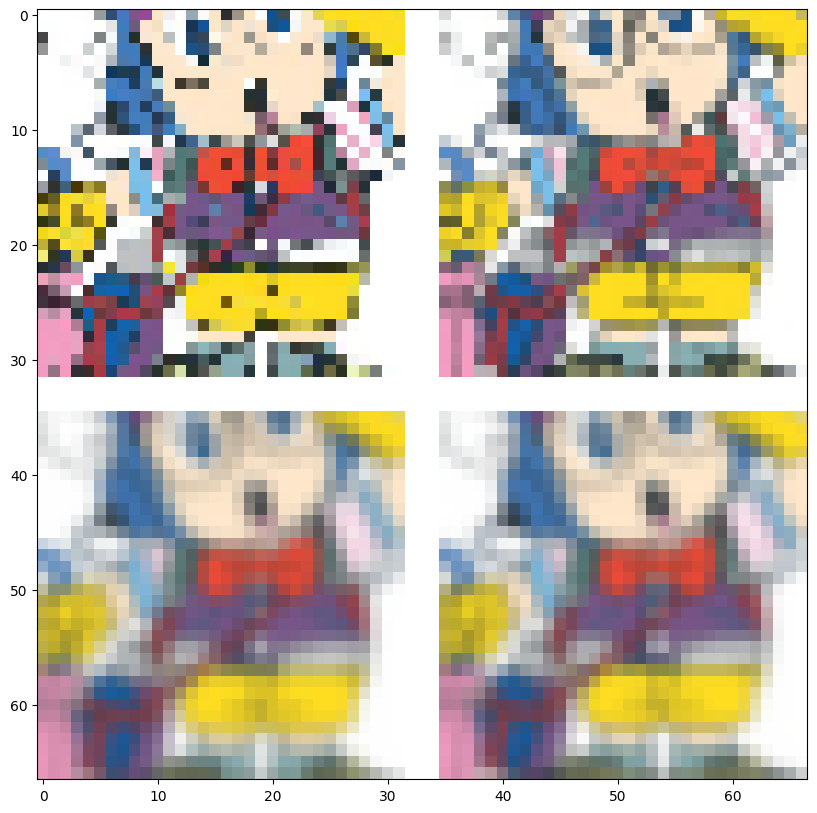
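A LAF here is a 2x3 matrix `[A | c]`: the 2x2 block `A` encodes the shape and size of the region, and the last column `c` is the keypoint center in pixels. The sketch below is plain PyTorch and assumes the usual convention that the region scale is `sqrt(|det A|)`. It checks that dividing the whole LAF by `DOWNSAMPLE` shrinks the center and the scale by the same factor, which is why `laf_small` marks the same region in the smaller image. The helper `laf_scale_and_center` is hypothetical; `kornia.feature` ships its own helpers for this, such as `get_laf_scale` and `get_laf_center`.

```python
import torch

DOWNSAMPLE = 4

# LAF layout (B, N, 2, 3): [[a11, a12, cx], [a21, a22, cy]]
laf_orig = torch.tensor([[150.0, 0, 180], [0, 150, 280]]).view(1, 1, 2, 3)
laf_small = laf_orig / float(DOWNSAMPLE)


def laf_scale_and_center(laf: torch.Tensor):
    """Hypothetical helper: region scale sqrt(|det A|) and center c of each LAF."""
    A = laf[..., :2]  # (B, N, 2, 2) affine shape
    center = laf[..., 2]  # (B, N, 2) keypoint center (x, y)
    scale = torch.linalg.det(A).abs().sqrt()  # (B, N)
    return scale, center


s_orig, c_orig = laf_scale_and_center(laf_orig)
s_small, c_small = laf_scale_and_center(laf_small)
print(s_orig.item(), c_orig.tolist())    # 150.0 [[[180.0, 280.0]]]
print(s_small.item(), c_small.tolist())  # 37.5 [[[45.0, 70.0]]]
```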

Now let's compare what the extracted patch looks like when it is extracted naively and when it is extracted from a scale pyramid.
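The intuition behind the pyramid variant can be sketched with a little arithmetic. Assume (our assumption, not taken from kornia's source) that a patch of `PS` pixels covers about `2 * scale` pixels per side, so naive sampling moves `2 * scale / PS` pixels between neighboring samples and skips image content whenever that step exceeds one pixel. A pyramid extractor can instead sample from a coarser level, where each level halves the resolution. The hypothetical helper `pyramid_level` picks the coarsest level at which the remaining step is still at least one pixel; the exact rule inside `KF.extract_patches_from_pyramid` may differ.

```python
import math


def pyramid_level(laf_scale: float, patch_size: int) -> int:
    """Hypothetical rule: coarsest pyramid level (each level halves the
    resolution) whose sampling step is still >= 1 pixel."""
    # Assumption: the patch spans 2 * laf_scale pixels per side
    step = 2.0 * laf_scale / patch_size
    return max(0, math.floor(math.log2(step)))


PS = 32
DOWNSAMPLE = 4
scale_orig = 150.0                     # scale of laf_orig
scale_small = scale_orig / DOWNSAMPLE  # scale of laf_small

lvl_orig = pyramid_level(scale_orig, PS)    # step 9.375 px -> level 3
lvl_small = pyramid_level(scale_small, PS)  # step ~2.34 px -> level 1

# Resolution of the level actually sampled, relative to the original image
res_orig = 1 / 2**lvl_orig
res_small = 1 / (DOWNSAMPLE * 2**lvl_small)
print(lvl_orig, lvl_small, res_orig, res_small)  # 3 1 0.125 0.125
```

Under these assumptions both patches are read from images at 1/8 of the original resolution, so they see almost the same content, whereas naive sampling reads them from resolutions that differ by a factor of 4.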

```python
PS = 32  # output patch size in pixels

with torch.no_grad():
    # Pyramid extraction: sample from a suitably downscaled version of the image
    patches_pyr_orig = KF.extract_patches_from_pyramid(img_original, laf_orig.to(device), PS)
    # Naive extraction: sample directly from the given image
    patches_simple_orig = KF.extract_patches_simple(img_original, laf_orig.to(device), PS)
    patches_pyr_small = KF.extract_patches_from_pyramid(img_small, laf_small.to(device), PS)
    patches_simple_small = KF.extract_patches_simple(img_small, laf_small.to(device), PS)
```

```python
# Now we will glue all the patches together:
def vert_cat_with_margin(p1, p2, margin=3):
    # Place p2 to the right of p1, separated by a white vertical stripe
    b, n, ch, h, w = p1.size()
    return torch.cat([p1, torch.ones(b, n, ch, h, margin, device=p1.device), p2], dim=4)


def horiz_cat_with_margin(p1, p2, margin=3):
    # Place p2 below p1, separated by a white horizontal stripe
    b, n, ch, h, w = p1.size()
    return torch.cat([p1, torch.ones(b, n, ch, margin, w, device=p1.device), p2], dim=3)


# Each row: patch from the original image | patch from the small image
patches_pyr = vert_cat_with_margin(patches_pyr_orig, patches_pyr_small)
patches_naive = vert_cat_with_margin(patches_simple_orig, patches_simple_small)
# Top row: naive extraction; bottom row: pyramid extraction
patches_all = horiz_cat_with_margin(patches_naive, patches_pyr)
```

Now let's show the result. The top row is what you get when extracting patches without any anti-aliasing: note how much the patches extracted from the images of different sizes differ.

The bottom row shows patches extracted from the same two images using a scale pyramid. They are still not exactly the same, but the difference is much smaller.

```python
plt.figure(figsize=(10, 10))
plt.imshow(K.tensor_to_image(patches_all[0, 0]))
```
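The same effect can be reproduced numerically on synthetic data. The sketch below uses plain PyTorch rather than kornia. It builds a random-noise image and a 4x smaller copy made by block averaging, then samples the same 32x32 grid from both with `torch.nn.functional.grid_sample`. The grid is in normalized coordinates, so it marks the same region at both resolutions. The "pyramid-like" variant first block-averages each image down to the patch resolution. This stands in for a scale pyramid and is not kornia's implementation; the image size and random seed are arbitrary choices.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
PS = 32         # patch size in pixels
DOWNSAMPLE = 4  # size ratio between the two images

# High-frequency content (noise) is where aliasing hurts most
noise = torch.rand(1, 1, 256, 256)
noise_small = F.avg_pool2d(noise, DOWNSAMPLE)  # 64x64, area-style downsampling

# Centers of a PS x PS grid covering the whole image, in normalized [-1, 1]
# coordinates (align_corners=False convention)
coords = (2 * torch.arange(PS) + 1) / PS - 1
gy, gx = torch.meshgrid(coords, coords, indexing="ij")
grid = torch.stack([gx, gy], dim=-1)[None]  # (1, PS, PS, 2), last dim is (x, y)


def sample(image: torch.Tensor) -> torch.Tensor:
    """Bilinearly sample the shared grid from `image`."""
    return F.grid_sample(image, grid, mode="bilinear", align_corners=False)


# Naive: sample directly, which skips most pixels of the larger image
naive_big, naive_small = sample(noise), sample(noise_small)

# Pyramid-like: average down to the patch resolution first, then sample
pyr_big = sample(F.avg_pool2d(noise, 256 // PS))           # 256 -> 32
pyr_small = sample(F.avg_pool2d(noise_small, 64 // PS))    # 64 -> 32

naive_diff = (naive_big - naive_small).abs().mean().item()
pyr_diff = (pyr_big - pyr_small).abs().mean().item()
print(f"naive: {naive_diff:.4f}, pyramid-like: {pyr_diff:.2e}")
```

In this toy setting the naive patches from the two resolutions disagree noticeably, while the prefiltered ones agree up to floating-point error, mirroring the top and bottom rows described above.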

Let's check how much this affects the performance of a local descriptor such as HardNet.

```python
hardnet = KF.HardNet(True).eval()  # pretrained weights

# Stack the 4 patches into one batch and convert RGB to grayscale,
# since HardNet expects (N, 1, 32, 32) inputs
all_patches = (
    torch.cat([patches_pyr_orig, patches_pyr_small, patches_simple_orig, patches_simple_small], dim=0)
    .squeeze(1)
    .mean(dim=1, keepdim=True)
)
with torch.no_grad():
    descs = hardnet(all_patches)
    distances = torch.cdist(descs, descs)  # pairwise L2 distances, shape (4, 4)
print(distances.cpu().detach().numpy())
```

```
[[0.         0.16867691 0.8070452  0.52112377]
 [0.16867691 0.         0.7973113  0.48472866]
 [0.8070452  0.7973113  0.         0.59267515]
 [0.52112377 0.48472866 0.59267515 0.        ]]
```

So the descriptor distance between the anti-aliased (pyramid) patches is about 0.17, while between the naively extracted patches it is about 0.59.
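To read the matrix: rows and columns follow the concatenation order `patches_pyr_orig, patches_pyr_small, patches_simple_orig, patches_simple_small`, so the two numbers of interest are entries `[0, 1]` and `[2, 3]`. Assuming HardNet returns L2-normalized (unit-length) descriptors, which is how HardNet is usually defined, the Euclidean distance relates to cosine similarity as `d**2 = 2 - 2 * cos`, so distances lie between 0 and 2. The sketch below copies the printed values, applies this relation, and checks the identity on random unit vectors.

```python
import torch

# Distance matrix printed above; order: pyr_orig, pyr_small, simple_orig, simple_small
dist_printed = torch.tensor([
    [0.0, 0.16867691, 0.8070452, 0.52112377],
    [0.16867691, 0.0, 0.7973113, 0.48472866],
    [0.8070452, 0.7973113, 0.0, 0.59267515],
    [0.52112377, 0.48472866, 0.59267515, 0.0],
])

d_pyramid = dist_printed[0, 1].item()  # same keypoint, pyramid extraction
d_naive = dist_printed[2, 3].item()    # same keypoint, naive extraction

# For unit-length descriptors: ||a - b||^2 = 2 - 2 * cos(a, b)
cos_pyramid = 1 - d_pyramid**2 / 2
cos_naive = 1 - d_naive**2 / 2
print(f"pyramid: d={d_pyramid:.3f}, cos={cos_pyramid:.3f}")
print(f"naive:   d={d_naive:.3f}, cos={cos_naive:.3f}")

# Sanity check of the identity on random 128-D unit vectors
torch.manual_seed(0)
unit = torch.nn.functional.normalize(torch.randn(5, 128), dim=1)
d_unit = torch.cdist(unit, unit)
assert torch.allclose(d_unit**2, 2 - 2 * unit @ unit.T, atol=1e-5)
```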